Welcome to the third newsletter prepared by the EAGE A.I. Committee this year. The committee is a group of EAGE members and volunteers who help you navigate the digital world and find the bits that are most relevant to geoscientists.

You are welcome to join EAGE or renew your membership to support the work of the EAGE A.I. Community and access all the benefits offered by the Association.

EAGE Membership Benefits: Join or Renew

Curious about everything EAGE is doing for digital transformation?

Visit the EAGE Digitalization Hub

What: GTC 2022 Keynote by NVIDIA CEO Jensen Huang. An hour-long event packed with technologies that will drive research and innovation over the next couple of years.

Why this is useful: Graphics processing units (GPUs) are the hardware backbone of the ongoing AI revolution, so it is worth keeping an eye on the state of the art. This year NVIDIA introduced the new Hopper GPU architecture and the Grace CPU Superchip, which it presents as delivering an order-of-magnitude performance leap over their predecessors. Omniverse is a platform for real-time 3D design collaboration coupled with physics simulation, which makes it possible to build digital twins of real-world objects. The keynote covers much more, so it is well worth watching.

Image source: GTC 2022 Keynote

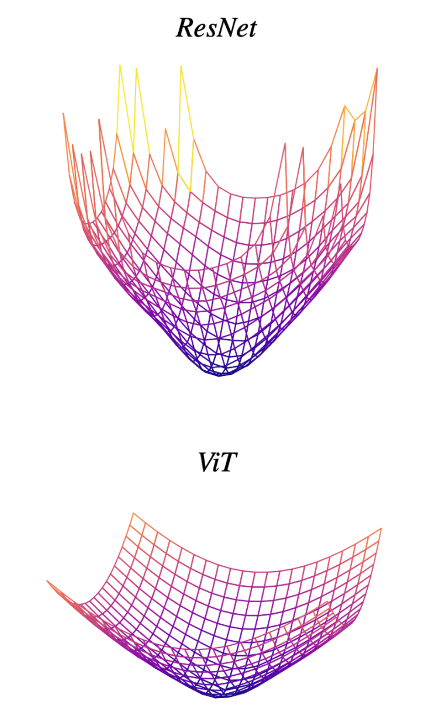

What: “How do vision transformers work? (ICLR 2022 Spotlight)”

The success of deep learning models built on the multi-head self-attention (MSA) mechanism is indisputable. In this work, the authors investigate the reasons behind the strengths and weaknesses of MSA and compare it with convolutions.

Why this is useful: Transformer models are widely known to often outperform convolutional models when trained on large datasets. Why do they need so much data, and why do convolutional networks learn faster? This paper seeks to answer these questions and shows that MSAs improve not only accuracy but also generalization by flattening the loss landscape. The authors also show that convolutions act as high-pass filters on the input data while MSAs act as low-pass filters, so the two complement each other. Building on this, the authors outperform the baseline by replacing some convolutions with MSAs in a common convolutional network.

Image source: How Do Vision Transformers Work?, Namuk Park, Songkuk Kim, arXiv:2202.06709
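
To get a feel for the filtering claim, here is a minimal Python sketch that is not taken from the paper. It assumes a toy 1D signal made of one slow and one fast sine wave. Softmax attention weights are non-negative and sum to one, so a uniform five-sample average is used as a crude stand-in for self-attention, and a hand-picked difference kernel stands in for a convolution that reacts to fine detail. All names and parameter values are illustrative assumptions.

```python
import cmath
import math


def dft_amplitude(signal, k):
    """Amplitude of frequency bin k of a real signal (naive DFT)."""
    n = len(signal)
    coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(signal))
    return abs(coeff) / n


def circular_filter(signal, kernel):
    """Apply a centred kernel with wrap-around boundaries."""
    n, half = len(signal), len(kernel) // 2
    return [
        sum(w * signal[(t + j - half) % n] for j, w in enumerate(kernel))
        for t in range(n)
    ]


# Toy input: one slow component (bin 2) plus one fast component (bin 24).
n, low_k, high_k = 64, 2, 24
signal = [
    math.sin(2 * math.pi * low_k * t / n) + math.sin(2 * math.pi * high_k * t / n)
    for t in range(n)
]

# Assumption: uniform weights mimic attention that averages its neighbours.
averaging = [1 / 5] * 5
# Assumption: a hand-picked difference kernel, not a learned convolution.
difference = [-1.0, 2.0, -1.0]

smoothed = circular_filter(signal, averaging)
sharpened = circular_filter(signal, difference)


def high_to_low_ratio(x):
    """How strong the fast component is relative to the slow one."""
    return dft_amplitude(x, high_k) / dft_amplitude(x, low_k)


print("input     :", round(high_to_low_ratio(signal), 3))
print("averaging :", round(high_to_low_ratio(smoothed), 3))
print("difference:", round(high_to_low_ratio(sharpened), 3))
```

The averaging step suppresses the fast component and the difference kernel amplifies it, which is the complementary behaviour the paper attributes to MSAs and convolutions. A learned convolution can of course take other shapes, so treat this only as an intuition aid.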

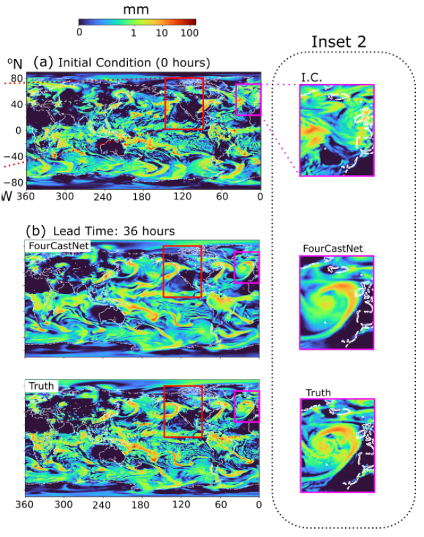

What: FourCastNet: A Global Data-driven High-resolution Weather Model using Adaptive Fourier Neural Operators, by Jaideep Pathak et al., 2022. The authors use a neural network instead of classical numerical methods to accelerate high-resolution Earth-scale modeling.

Why this is useful: Moving from a toy example to a large-scale application is a common problem in the world of numerical simulations. The authors address it by replacing classical numerical methods with an artificial neural network for modeling the atmosphere. The paper introduces FourCastNet, short for Fourier Forecasting Neural Network, a global data-driven weather forecasting model that provides accurate short- to medium-range global predictions. Its architecture is the Adaptive Fourier Neural Operator (AFNO), which combines the Fourier Neural Operator (FNO) learning approach with a vision transformer backbone. This work is a significant step toward building a reliable high-resolution digital twin of Earth for studying climate extremes.

Image source: FourCastNet: A Global Data-driven High-resolution Weather Model using Adaptive Fourier Neural Operators, Jaideep Pathak et al., arXiv:2202.11214
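
For readers new to Fourier Neural Operators, the core idea can be sketched in a few lines of Python: transform the input to the Fourier domain, reweight a handful of low-frequency modes, and transform back. This is a simplified 1D illustration under our own assumptions, not FourCastNet's implementation. The weights here are fixed by hand rather than learned, the function name `spectral_layer` is hypothetical, and the adaptive, transformer-specific parts of AFNO are not reproduced.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def spectral_layer(x, weights):
    """Scale the lowest len(weights) Fourier modes of a real signal, drop the rest.

    Assumption: len(weights) is smaller than len(x) / 2 and weights[0] is real.
    """
    n = len(x)
    spectrum = dft(x)
    mixed = [0j] * n
    for k, w in enumerate(weights):
        mixed[k] = w * spectrum[k]
        if 0 < k < n - k:
            # Mirror into the conjugate bin so the output stays real-valued.
            mixed[n - k] = mixed[k].conjugate()
    return [value.real for value in idft(mixed)]


# Toy input: a constant, a slow wave (mode 1) and a faster wave (mode 5).
n = 16
signal = [1 + math.cos(2 * math.pi * t / n) + math.cos(2 * math.pi * 5 * t / n)
          for t in range(n)]

# Keep mode 0 as is, double mode 1, and discard every higher mode.
output = spectral_layer(signal, weights=[1.0, 2.0])
print([round(v, 3) for v in output])
```

In a trained operator the per-mode weights are learned from data, and FourCastNet applies the idea on a 2D latitude-longitude grid rather than a 1D series.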

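What: Paper review of “Unsupervised Machine Learning Towards an Automated Seismic Interpretation Tool”

Why this is useful: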

Seismic interpretation is hampered by large data volumes, the complexity of the data, the time it takes, and the uncertainty introduced by the interpreters themselves. Unsupervised machine learning models, which find underlying patterns in data, offer a route to accurate interpretation without any reference or label, and so reduce human bias. The authors recommend applying unsupervised clustering to seismic and well-log data separately. Relating the well logs, which carry lithologic information, to the seismic data then yields more information-rich input for interpretation. The seismic groups obtained from field data accurately described the main seismic facies and matched well with the groups obtained from well-log data.

Link to the paper review on YouTube.

Credit: Ruslan Miftakhov – AI Research, Unsupervised Machine Learning Towards an Automated Seismic Interpretation Tool | Paper Review
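
As a rough illustration of what clustering without labels looks like in practice, the sketch below runs plain k-means in Python on a handful of made-up two-attribute samples. The summary above does not say which clustering algorithm the authors used, so k-means is swapped in here only because it is familiar. The attribute values, the function name `kmeans`, and the starting centroids are all assumptions.

```python
import math


def kmeans(points, centroids, n_iter=20):
    """Plain Lloyd's algorithm. Returns (labels, centroids)."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(n_iter):
        # Assignment step: each sample joins its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        updated = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                updated.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                updated.append(old)  # keep an empty cluster where it was
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids


# Hypothetical normalised attributes per sample, e.g. (amplitude, frequency).
samples = [
    (0.10, 0.20), (0.15, 0.25), (0.20, 0.10),
    (0.90, 0.80), (0.85, 0.95), (0.80, 0.90),
]

# Assumption: start from two of the samples to keep the run deterministic.
labels, centroids = kmeans(samples, centroids=[samples[0], samples[3]])
print(labels)
print(centroids)
```

No labels go in, yet the samples fall into two groups. Running the same procedure on seismic attributes and on well-log curves separately, then comparing the groups, is the kind of workflow the reviewed paper describes.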

What: GitHub repository with first-break picking code

Why this is useful:

The travel time of the first arrival is used in a variety of applications, including tomographic static corrections, source location, and velocity inversion. However, because the recorded signal is heavily influenced by the acquisition, subsurface structures, and signal-to-noise ratio, first-break picking cannot be fully automated by traditional techniques. As a result, picking on data with large static corrections, low energy, low signal-to-noise ratios, or abrupt phase changes requires intensive human involvement.

Deep learning can assist with first-arrival picking on field seismic data. If you want to train your own first-break picking solution based on U-Net segmentation, head to Aleksei Tarasov’s GitHub account. He has provided complete training code, a pipeline for generating synthetic data, and a well-written summary.
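
For contrast with the deep learning approach, here is a minimal sketch of a traditional picker of the kind mentioned above. It is not code from the repository. It picks the sample where the energy in a short window after the sample is largest relative to the energy in the window before it. The synthetic trace, the window length, and the function name `energy_ratio_pick` are assumptions chosen for illustration.

```python
import math


def energy_ratio_pick(trace, window=10, eps=1e-12):
    """Return the sample index where the after/before energy ratio peaks."""
    best_index, best_ratio = None, -1.0
    for t in range(window, len(trace) - window):
        before = sum(v * v for v in trace[t - window:t])
        after = sum(v * v for v in trace[t:t + window])
        ratio = after / (before + eps)  # eps guards against division by zero
        if ratio > best_ratio:
            best_index, best_ratio = t, ratio
    return best_index


# Synthetic trace: weak background oscillation, then a decaying wavelet.
onset = 120
trace = []
for t in range(200):
    sample = 0.02 * math.sin(1.3 * t)  # stand-in for low-level noise
    if t >= onset:
        tau = t - onset
        sample += math.exp(-0.02 * tau) * math.sin(0.5 * tau)
    trace.append(sample)

pick = energy_ratio_pick(trace)
print("true onset:", onset, "picked:", pick)
```

On a clean trace like this one the pick lands next to the true onset. With strong noise or abrupt phase changes the energy ratio becomes unreliable, which is where a trained segmentation network is expected to help.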

Discover EAGE Learning Resources on A.I. and machine learning

Third EAGE Workshop on HPC in Americas

This newsletter is edited by the EAGE A.I. Committee.

| Name | Company / Institution | Country |
|---|---|---|
| Anna Dubovik | WAIW | United Arab Emirates |
| Jan H. van de Mortel | Independent | Netherlands |
| Jing Sun | TU Delft | Netherlands |
| Julio Cárdenas | Géolithe | France |
| George Ghon | Capgemini | Norway |
| Lukas Mosser | Aker BP | Norway |
| Oleg Ovcharenko | NVIDIA | United Arab Emirates |
| Nicole Grobys | DGMK | Germany |
| Roderick Perez | OMV | Austria |
| Surender Manral | Schlumberger | Norway |
| Yohanes Nuwara | Aker BP | Norway |